Yak Shaving

Benjamin Smedberg wrote about shaving yaks a few weeks ago. This is an experience all programmers (and probably people in most other professions!) encounter regularly, and yet it still baffles and frustrates us!

Just last week I got tangled up in a herd of yaks.

I started the week wanting to deploy the new bundleclone extension that :gps has been working on. If you're not familiar with bundleclone, it's a combination of server and client side extensions to mercurial that allow the server to advertise an externally hosted static bundle to clients requesting full clones. hg.mozilla.org advertises bundles hosted on S3 for certain repositories. This can significantly reduce load on the mercurial servers, something that has caused us problems in the past!

With the initial puppet patch written and sanity-checked, I wanted to verify it worked on other platforms before deploying it more widely.

Linux...looks ok. I was able to make some minor tweaks to existing puppet modules to make testing a bit easier as well. Always good to try and leave things in better shape for the next person!

OSX...looks ok...er, wait! All our test jobs are re-cloning tools and mozharness for every job! What's going on? I'm worried that we could be paying for unnecessary S3 bandwidth if we don't fix this.

I find bug 1103700 where we started deploying and relying on machine-wide caches of mozharness and tools. Unfortunately this wasn't done on OSX yet. Ok, time to file a new bug and start a new puppet branch to get these shared repositories working on OSX. The patch is pretty straightforward, and I start testing it out.

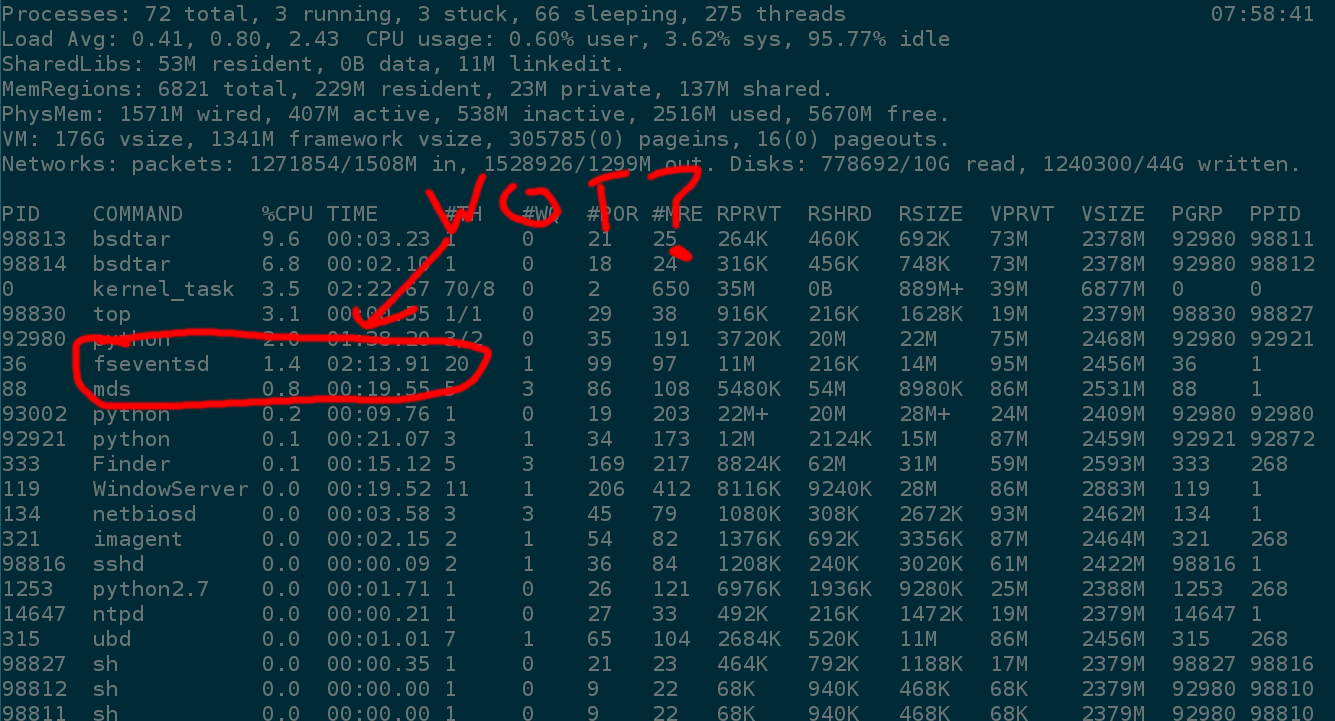

Because these tasks run at boot as part of runner, I need to be watching logs on the test machines very early on in the bootup process. Hmmm, the machine seems really sluggish when I'm trying to interact with it right after startup. A quick glance at top shows something fishy:

There's a system daemon called fseventsd taking a substantial amount of system resources! I wonder if this is having any impact on build times. After filing a new bug, I do a few web searches to see if this service can be disabled. Most of the articles I find suggest writing a no_log file into the volume's .fseventsd directory to stop fseventsd from writing out event logs for the volume. Time for another puppet branch!
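For anyone curious what the no_log workaround amounts to, here's a minimal sketch in Python rather than puppet. It is not the actual patch: the function name `disable_fsevents_logging` is made up for illustration, and the file is created empty on the assumption that its presence alone is what fseventsd looks for. The demo runs against a temporary directory standing in for a volume, so it doesn't touch a real `.fseventsd` directory.

```python
import os
import tempfile


def disable_fsevents_logging(volume_root):
    """Create <volume_root>/.fseventsd/no_log and return its path.

    Hypothetical helper for illustration; on a real machine volume_root
    would be the mount point of the volume and this would need root.
    """
    fseventsd_dir = os.path.join(volume_root, ".fseventsd")
    # The directory normally exists already on a real volume; create it if not.
    os.makedirs(fseventsd_dir, exist_ok=True)

    no_log_path = os.path.join(fseventsd_dir, "no_log")
    # Append mode creates the file if missing and leaves an existing one
    # untouched, so running this repeatedly is harmless.
    with open(no_log_path, "a"):
        pass
    return no_log_path


if __name__ == "__main__":
    # Use a throwaway directory as a stand-in for a volume root.
    with tempfile.TemporaryDirectory() as fake_volume:
        path = disable_fsevents_logging(fake_volume)
        print("created", os.path.relpath(path, fake_volume))
```

Making the step idempotent matters here because anything run from puppet or at boot will be applied over and over on the same machine.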

And that's the current state of this project! I'm currently testing the no_log fix to see if it impacts build times, or if it causes other problems on the machines. I'm hoping to deploy the new runner tasks this week, and switch our buildbot-configs and mozharness configs to make use of them.

And finally, the first yak I started shaving could be the last one I finish shaving; I'm hoping to deploy the bundleclone extension this week as well.
